Moving Instrumentbot: v 1.0 Arduino / v 2.0 Pure Data Project

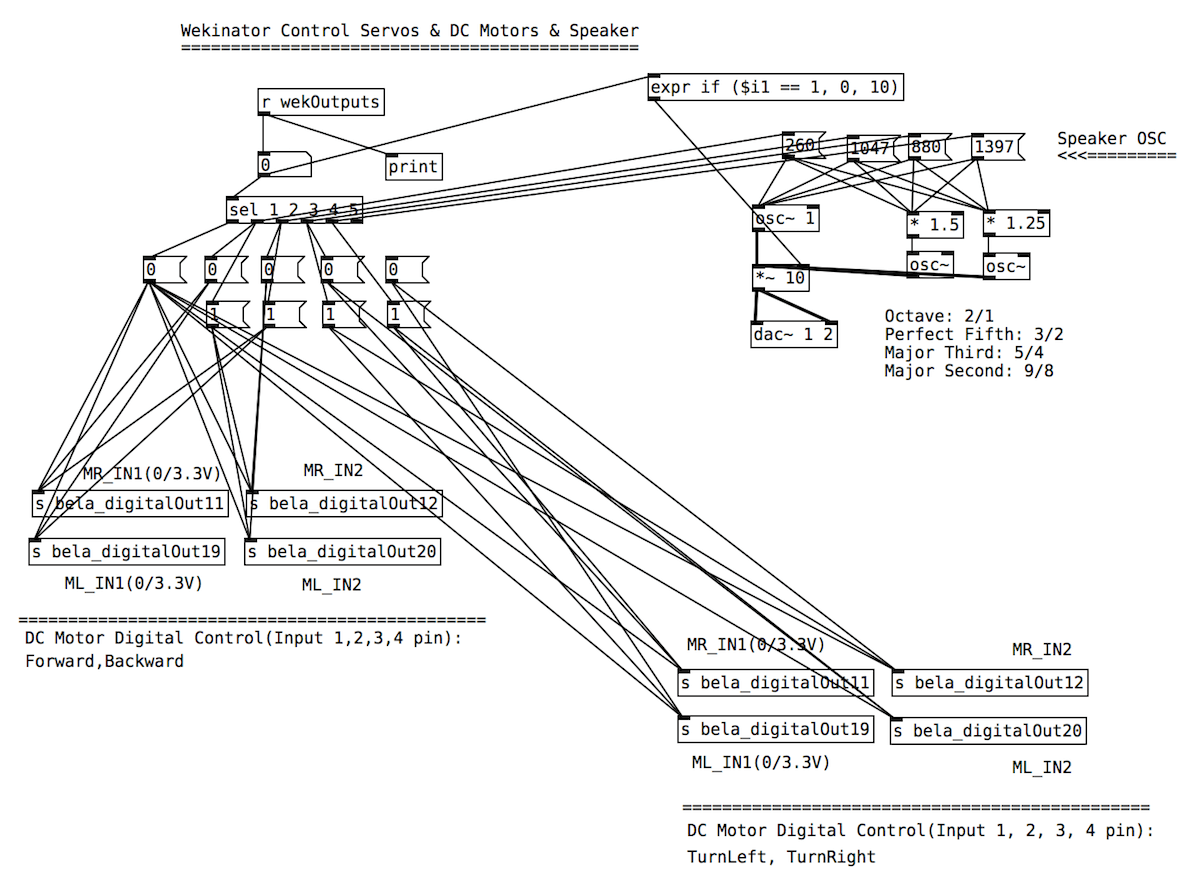

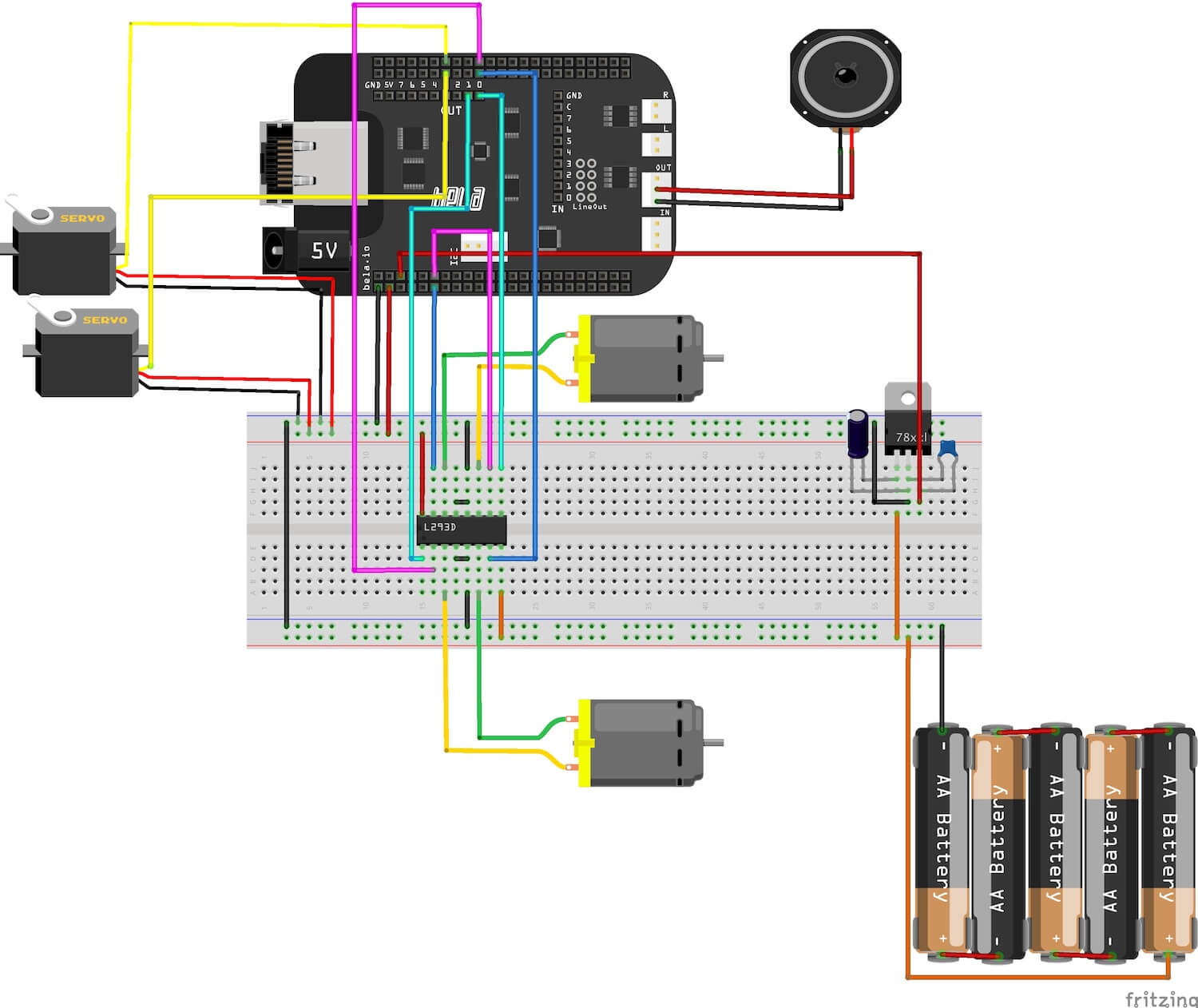

• v 2.0 Physical Interaction Design Course Project: Pure Data / Bela Board / Wekinator / WiFi Dongle / Speaker / DC Motors / Servos

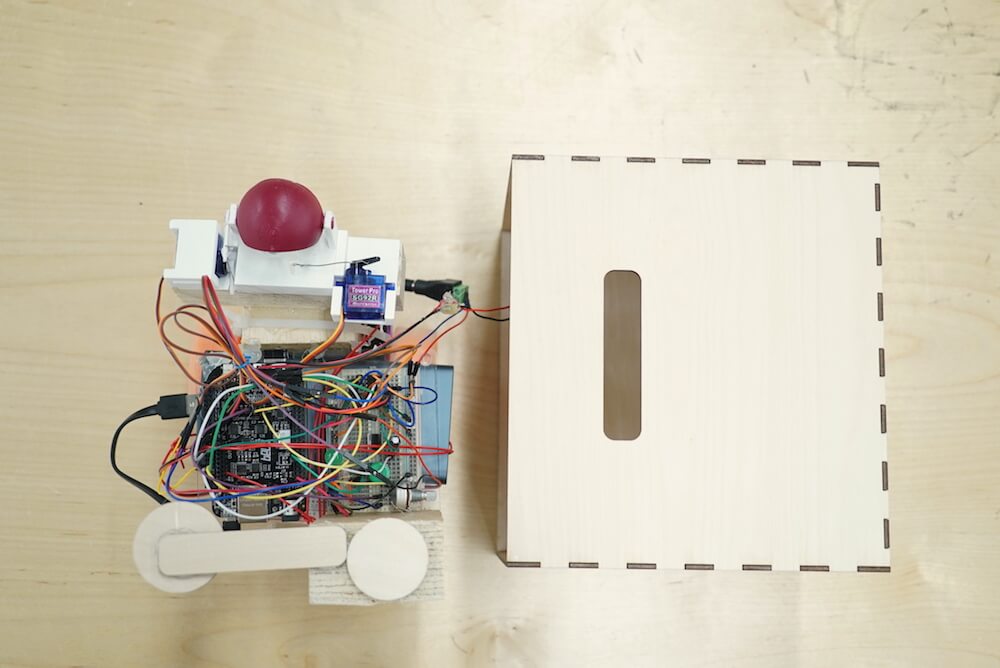

• 25 (H) × 25 (W) × 25 (D) cm

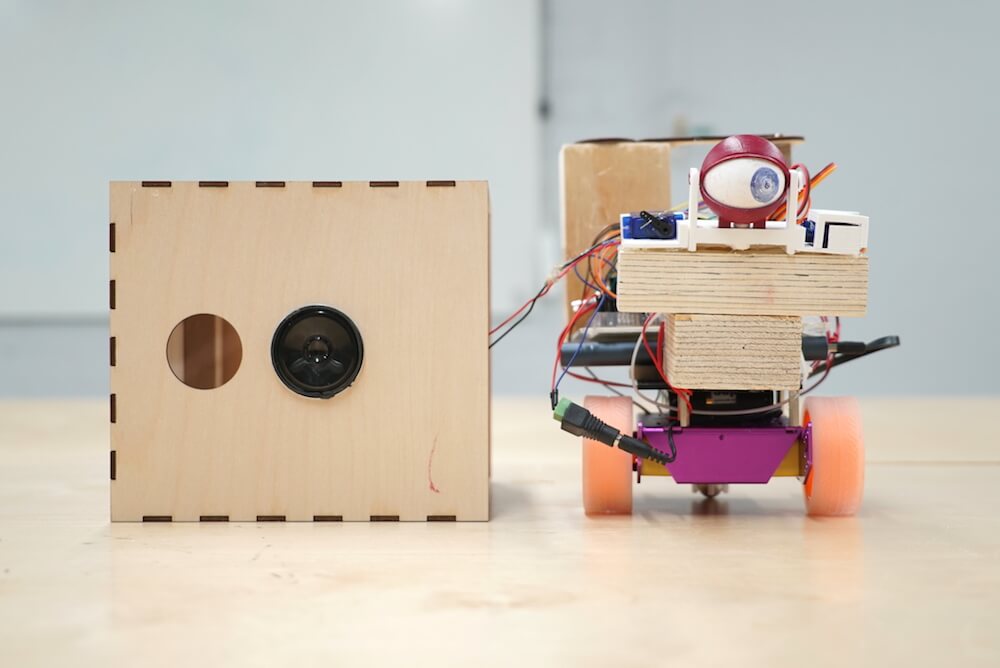

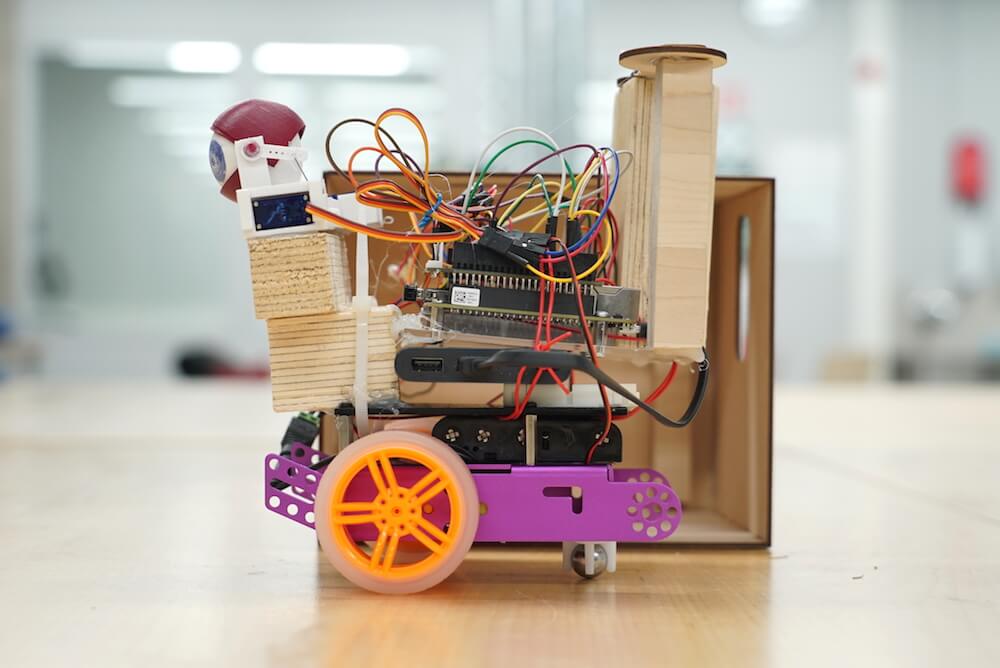

The instrumentbot uses the EFA robot as its starting point, with the original Arduino UNO board replaced by a different controller board in each version: an ESP32 in v 1.0 and a Bela board in v 2.0. These boards are programmed in Arduino and Pure Data respectively. Three new features were also added: remote control over WiFi (TCP/IP), a moving eyeball driven by two servo motors, and a speaker that plays sine waves at several frequencies generated by Pure Data. These additions make the robot more engaging and let audiences steer it intuitively by themselves.

IDEA

The idea is that the instrumentbot roams around on its own wheels, much like the scary boy’s head attached to a spider body in the animated film “Toy Story”, creating an unusual artificial monster. It plays an electronic sound whenever it detects a change in the orientation of an audience member’s smartphone. The orientation arrives as OSC data sent by any OSC application on the phone and received through the robot’s WiFi dongle, which makes the robot look like a living creature while also allowing it to be controlled remotely. Wekinator, a machine learning toolkit, classifies which way the smartphone is oriented and sends that class to Pure Data, which controls a speaker, two servos and two DC motors on the Bela board.

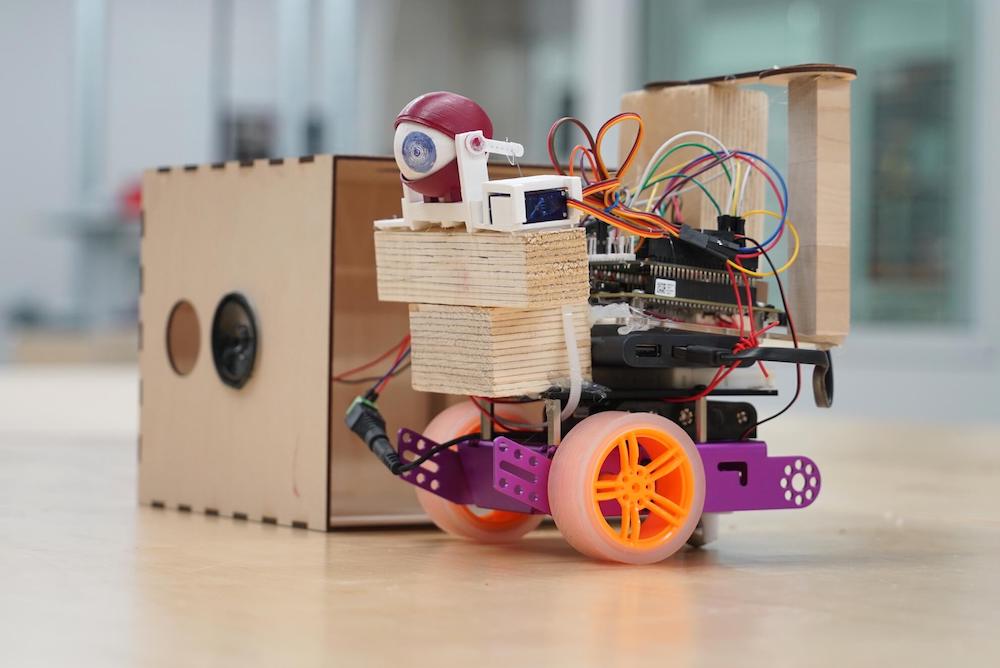

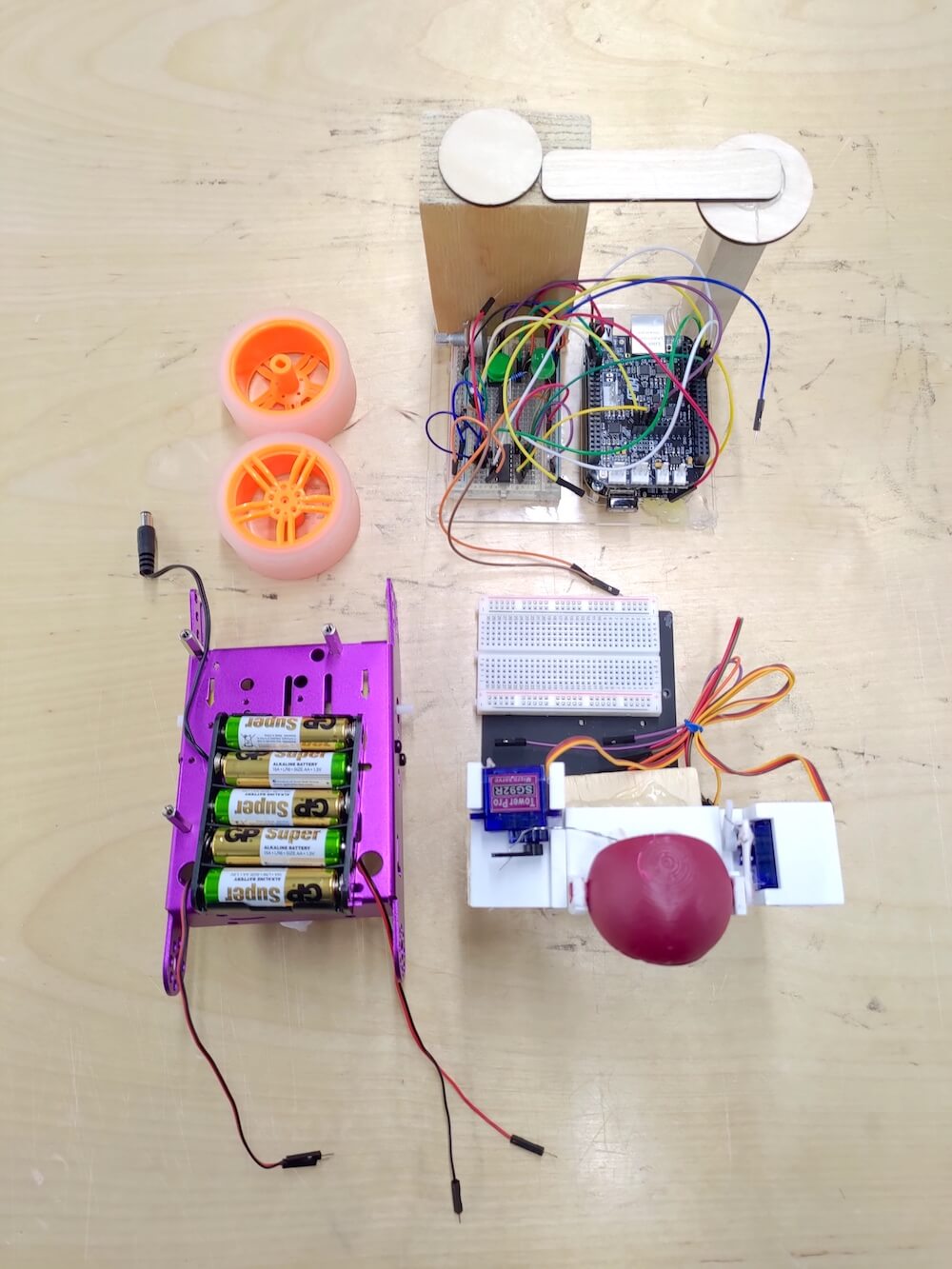

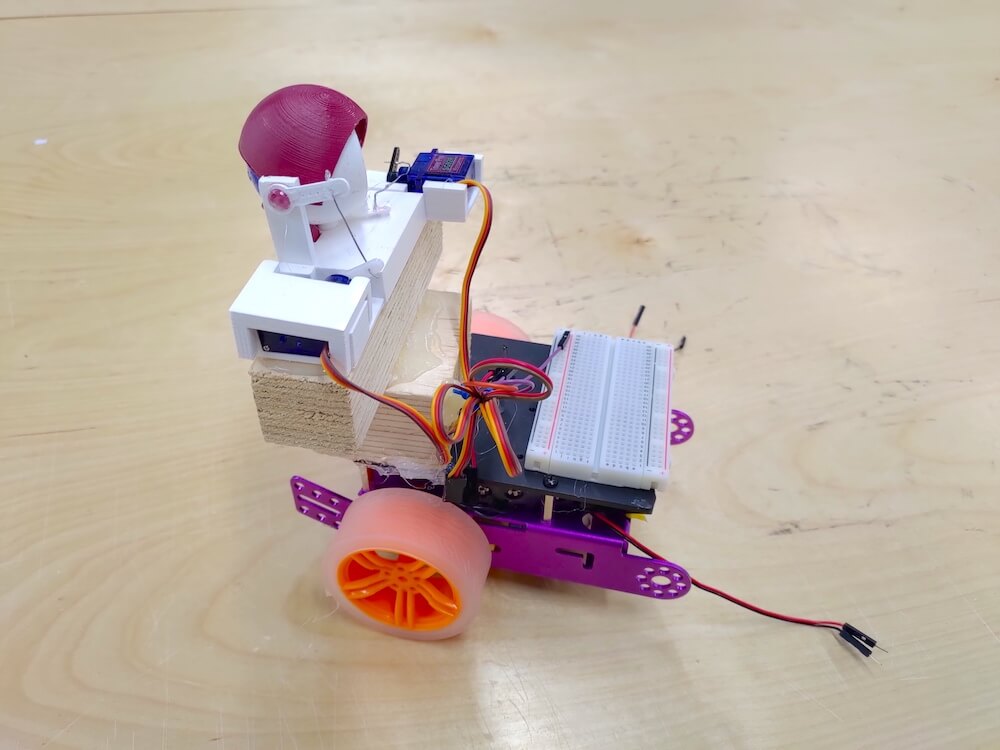

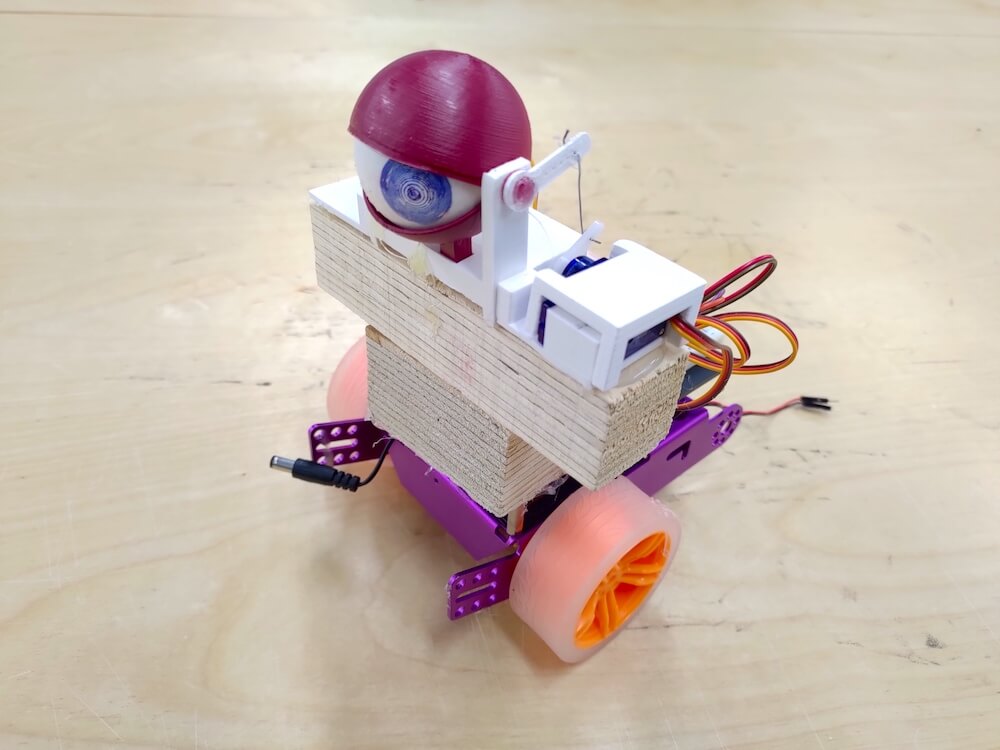

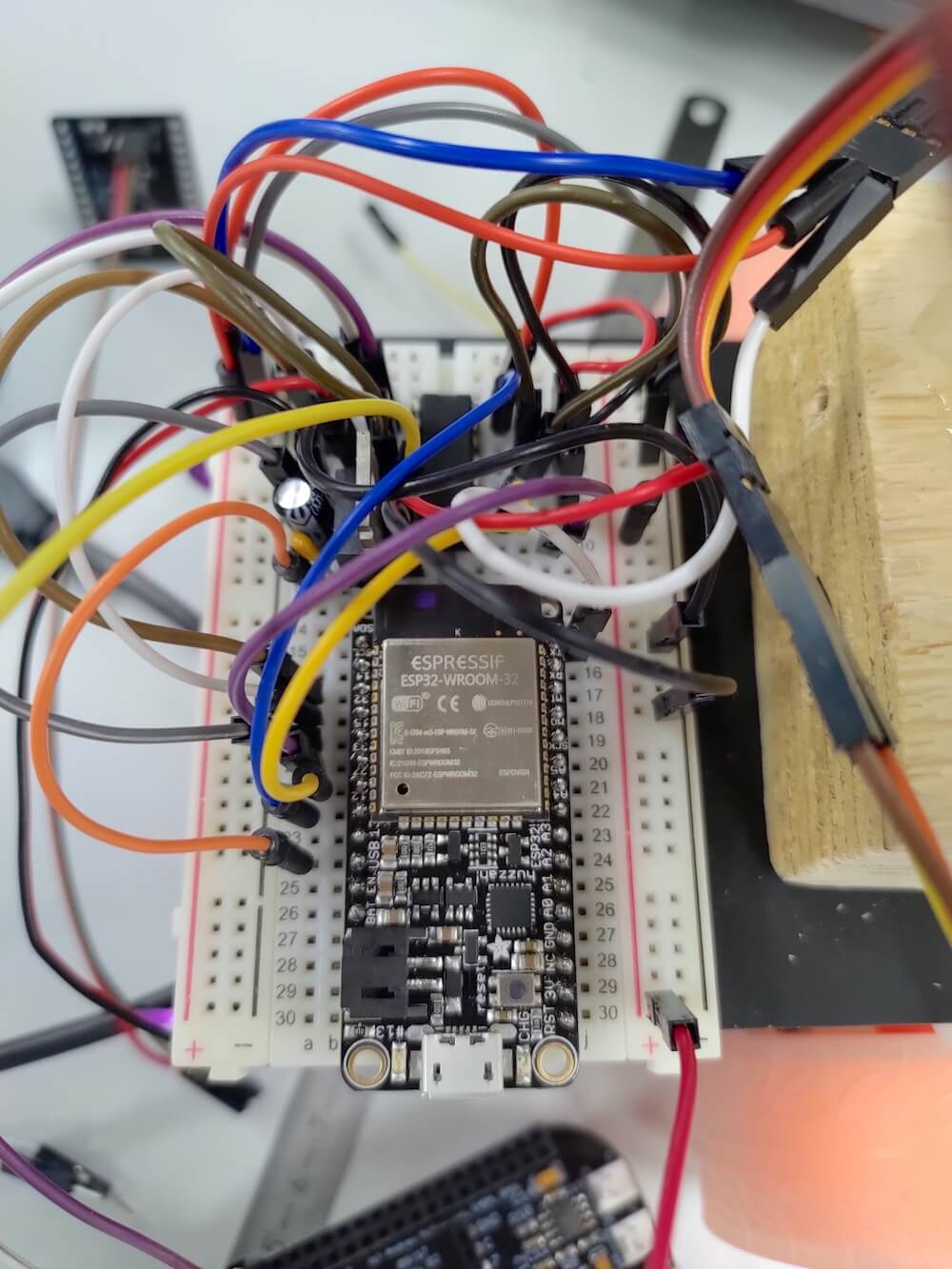

Figure 1. The images above show both the inside and the outside of the moving instrumentbot.

MECHANISM AND CONSTRUCTION

V 1.0 ARDUINO (ESP32) VERSION

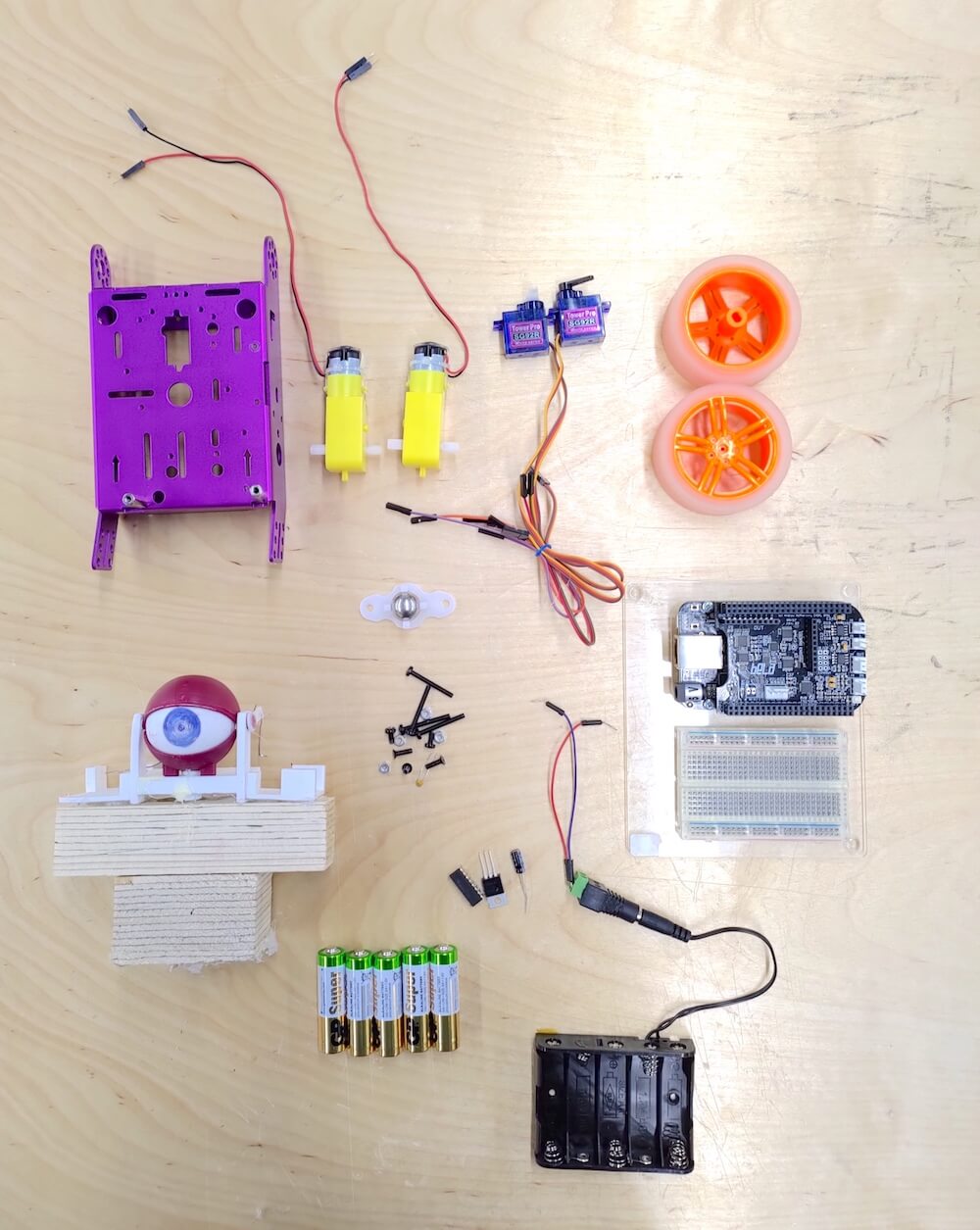

Components and Supplies

| Item | Quantity |
|---|---|
| EFA Robot Components (excluding the ultrasonic and light sensors) | 1 |
| Adafruit HUZZAH32 – ESP32 Feather Board (replaces the Arduino UNO) | 1 |
| 3D-Printed Eyeball Model | 1 |
| SG90 Servo Motor | 2 |
| Custom Laser-Cut Wooden Box | 1 |

What Does It Do?

This instrumentbot is controlled remotely via a webpage opened on any portable device connected to the same network as the instrumentbot.

The project combines these topics:

- Uploading a customized webpage, with multiple buttons in different colors, to the server on the robot to control its movement

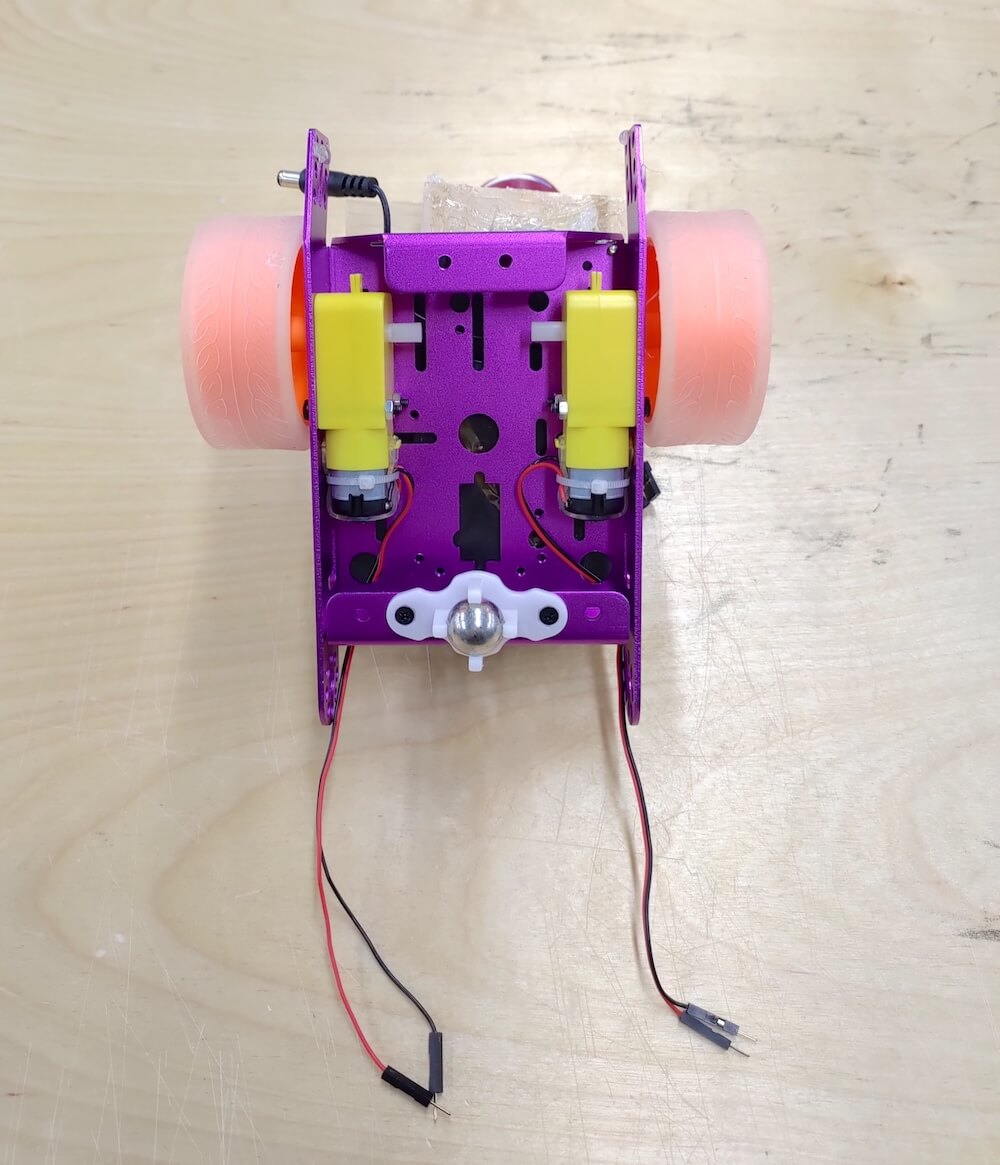

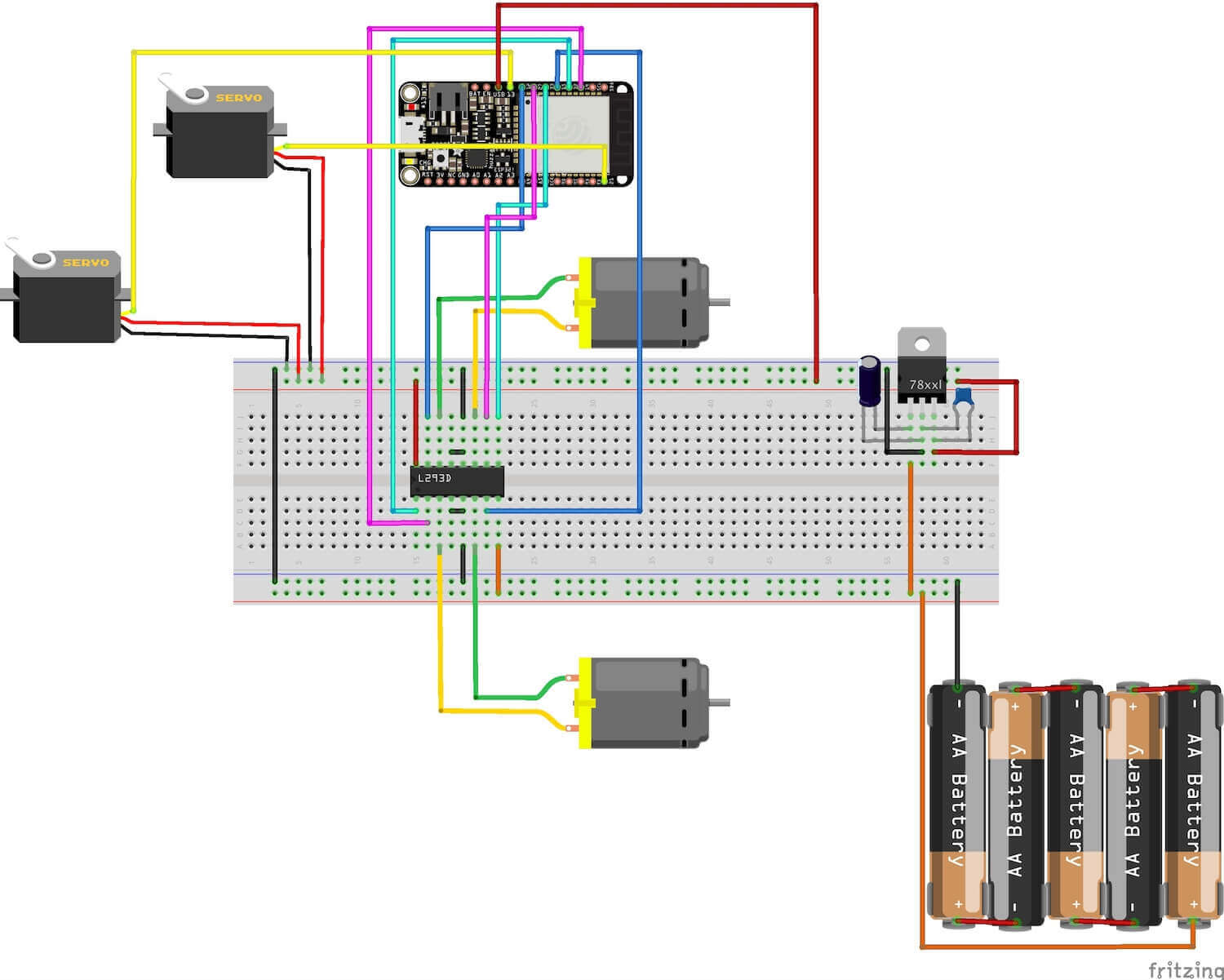

- Controlling DC motors with the L293D H-bridge IC by outputting PWM with a specific duty cycle to its ENABLE pins and digital high or low values to its INPUT pins
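The L293D control described in the last item can be sketched as a lookup table plus a duty-cycle conversion. This is only an illustration in Python, not the project's Arduino code: the pin grouping (IN1/IN2 for the left motor, IN3/IN4 for the right), the forward polarity, the pivot-style turns and the 8-bit PWM resolution are all assumptions that depend on the actual wiring and board setup.

```python
# Illustrative sketch only. Pin grouping, polarity and PWM resolution are
# assumptions; the real values depend on how the motors are wired.

# (IN1, IN2, IN3, IN4): IN1/IN2 drive the left motor, IN3/IN4 the right motor.
DIRECTION_TO_INPUTS = {
    "forward":  (1, 0, 1, 0),
    "backward": (0, 1, 0, 1),
    "left":     (0, 1, 1, 0),  # left wheel reverses, right wheel advances
    "right":    (1, 0, 0, 1),  # right wheel reverses, left wheel advances
    "stop":     (0, 0, 0, 0),
}


def duty_to_pwm(duty_cycle, resolution_bits=8):
    """Convert a duty cycle in [0.0, 1.0] to an integer PWM value."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return round(duty_cycle * (2 ** resolution_bits - 1))


def drive(direction, duty_cycle):
    """Return the INPUT pin levels and the ENABLE PWM value for a command."""
    inputs = DIRECTION_TO_INPUTS[direction]
    enable = 0 if direction == "stop" else duty_to_pwm(duty_cycle)
    return {"inputs": inputs, "enable_pwm": enable}
```

The INPUT pins only select the direction of each motor, while the duty cycle on the ENABLE pins sets the speed, so the two concerns stay separate in the sketch as well.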

The instrumentbot is curious about everything around it and wants to set off on an adventure. However, it doesn’t know which direction to take and therefore relies heavily on the audience’s instructions to find a suitable way to move.

The code works like this:

- The robot serves a webpage at a specific IP address (the server on the robot), which the audience’s smartphone can open when it is connected to the same SSID

- The robot moves forward, backward, left or right according to the command received from the audience’s mobile browser

- Once the robot stops moving, it rolls its eyeball, which is driven by two servo motors
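The flow in the list above can be sketched as two small functions: one that turns an incoming HTTP request line into a movement command, and one that decides what the motors and the eyeball do next. This is a Python illustration rather than the Arduino sketch itself; the URL paths (`/forward`, `/stop`, and so on) and the state names are hypothetical, since the original routes are not shown here.

```python
# Hypothetical routes: the paths used by the real webpage are not shown
# in the text, so these names are placeholders.
COMMANDS = {
    "/forward": "forward",
    "/backward": "backward",
    "/left": "left",
    "/right": "right",
    "/stop": "stop",
}


def parse_command(request_line):
    """Extract a movement command from a line like 'GET /forward HTTP/1.1'.

    Returns None for non-GET requests and unknown paths.
    """
    parts = request_line.split()
    if len(parts) < 2 or parts[0] != "GET":
        return None
    path = parts[1].split("?", 1)[0]  # ignore any query string
    return COMMANDS.get(path)


def next_state(command):
    """Drive while a direction is active; move the eyeball once stopped."""
    if command is None:
        return {"motors": "unchanged", "eyeball": "unchanged"}
    if command == "stop":
        return {"motors": "stop", "eyeball": "look_around"}
    return {"motors": command, "eyeball": "idle"}
```

For example, `next_state(parse_command("GET /stop HTTP/1.1"))` yields a state in which the motors are stopped and the eyeball starts looking around.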

ESP32 Pin Connection (ESP32 Diagram)

Simple Webpage Controller Demo

The webpage will be built and uploaded to the server on the instrumentbot via Arduino code.

See the Pen ESP32_Intrumentbot_Movement_Control_webpage by 謝宛庭 (@cv47522) on CodePen.

Implementation: Arduino Code

V 2.0 BELA BOARD VERSION

Components and Supplies

| Item | Quantity |
|---|---|
| EFA Robot Components (excluding the ultrasonic and light sensors) | 1 |
| Bela Cape Starter Kit (replaces the Arduino UNO) | 1 |
| Asus USB-N10 Nano WiFi Adapter (Linux support) | 1 |
| 3D-Printed Eyeball Model | 1 |
| SG90 Servo Motor | 2 |
| 1W Speaker | 1 |
| Custom Laser-Cut Wooden Box | 1 |
| Power Bank (optional; alternatively, use a 7805 regulator to power the Bela by feeding 5V into pin P9_06) | 1 |

What Does It Do?

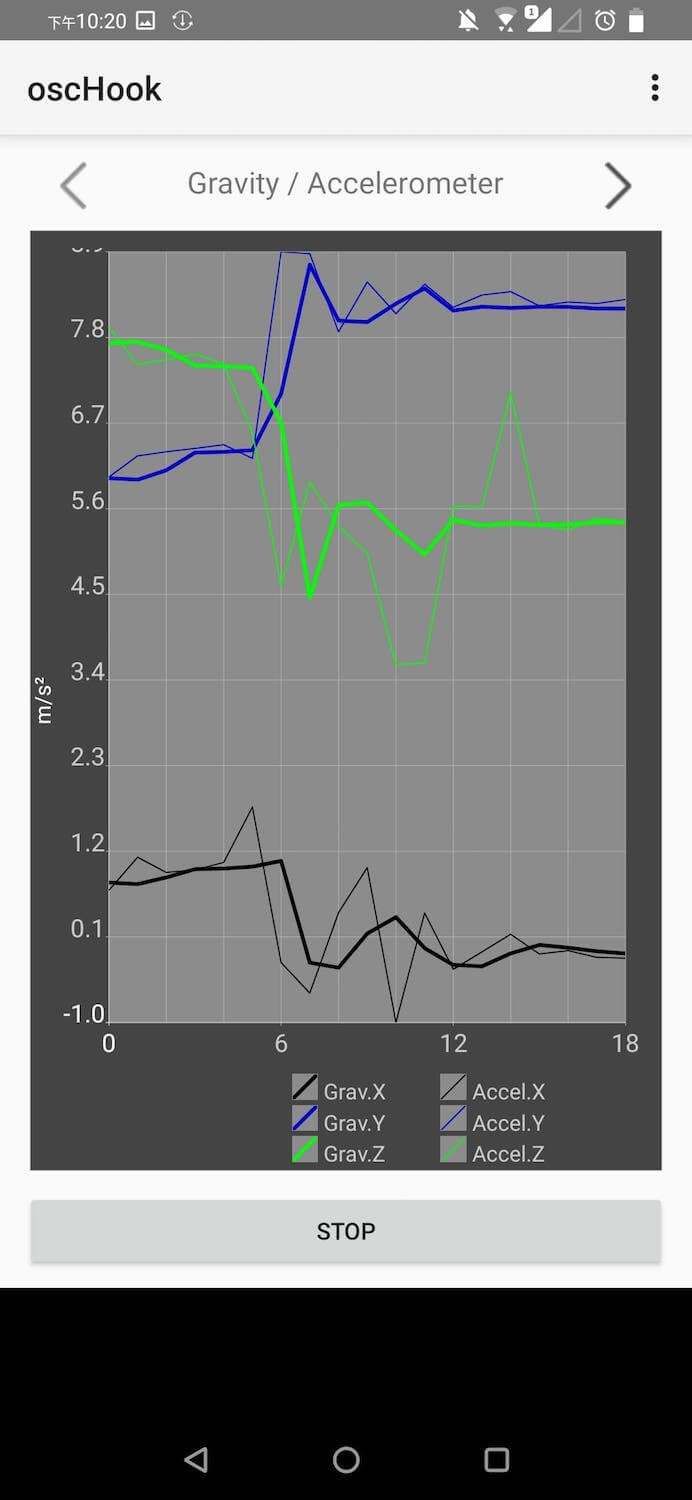

This instrumentbot is controlled remotely by the accelerometer of a smartphone on the same network, which means the audience can control the robot simply by turning their smartphone face up, face down, left or right. Smartphones identify their orientation with an accelerometer, a small sensor that measures motion along each axis.

The project combines these topics:

- Setting up WiFi on the Linux operating system that runs on the Bela board, by editing its network interface configuration after plugging the USB WiFi dongle into the board

- Training Wekinator on the axis data received from the smartphone’s OSC application

- Controlling DC motors with the L293D H-bridge IC by generating PWM with a specific duty cycle in Pure Data for its ENABLE pins and digital high or low values for its INPUT pins
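To give a feel for the training step in the list above, here is a minimal nearest-neighbour classifier in Python. It is a stand-in for illustration, not a description of how Wekinator is configured in this project. The example readings (in units of g) and the class numbers assigned to each orientation are made up.

```python
import math

# Made-up training examples: ((x, y, z) accelerometer reading, class label).
EXAMPLES = [
    ((0.0, 0.0, 1.0), 1),   # phone lying flat
    ((0.0, 0.0, -1.0), 2),  # phone upside down
    ((0.0, 1.0, 0.0), 3),   # phone standing vertically
    ((1.0, 0.0, 0.0), 4),   # phone turned to the right
    ((-1.0, 0.0, 0.0), 5),  # phone turned to the left
]


def train(examples):
    """'Training' a 1-nearest-neighbour model just stores the examples."""
    return list(examples)


def classify(model, sample):
    """Return the label of the stored example closest to the sample."""
    _, label = min(model, key=lambda example: math.dist(example[0], sample))
    return label
```

With this toy model, a slightly noisy reading such as `(0.1, -0.05, 0.95)` is still assigned to the "lying flat" class, which is the kind of tolerance that makes a trained classifier more convenient than hand-written thresholds.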

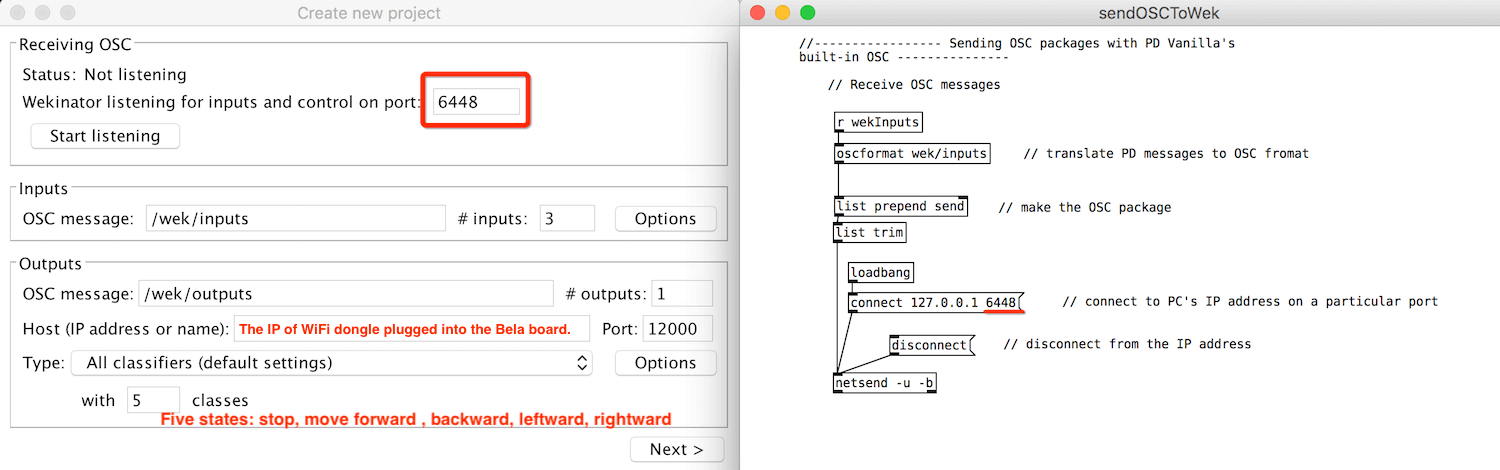

Three Pd patches together implement the entire interaction: getOSC.pd and sendOSCToWek.pd run locally on the PC, whereas _main.pd runs on the Bela board. The code works like this:

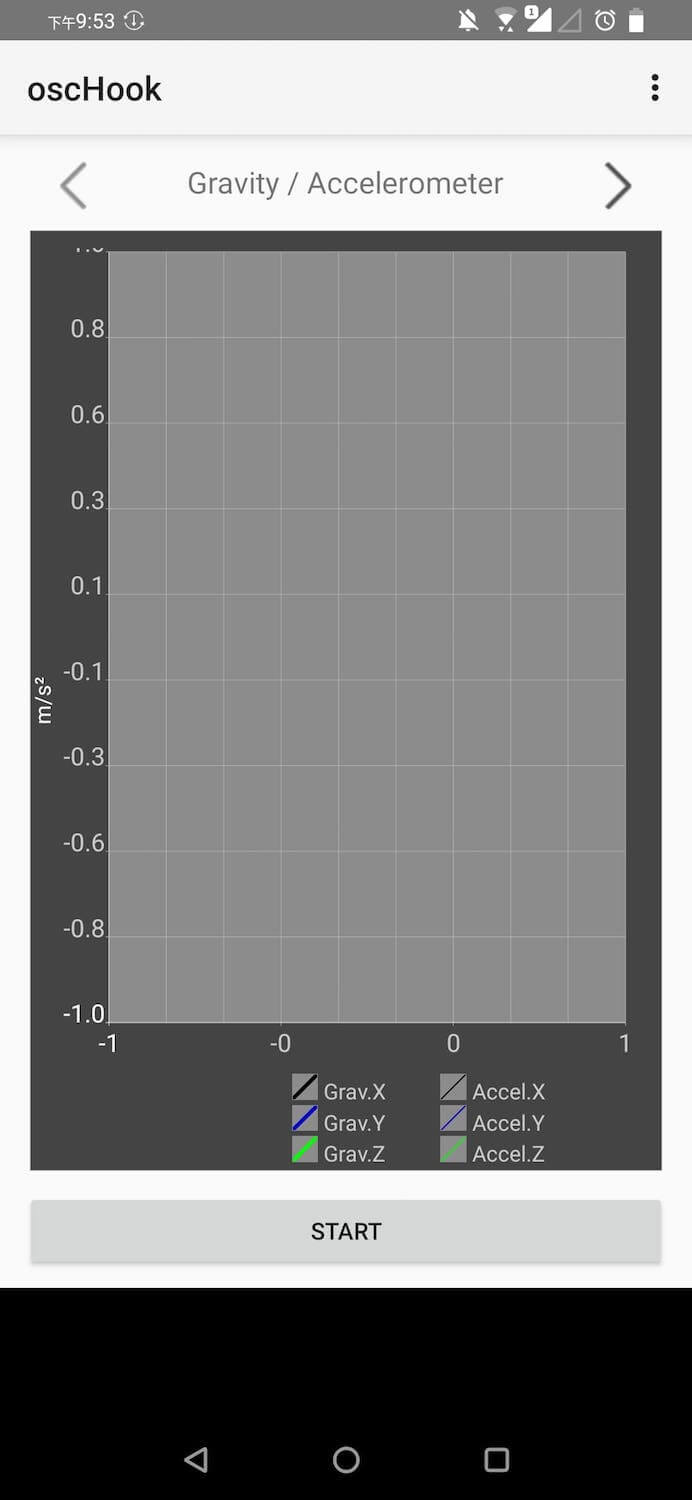

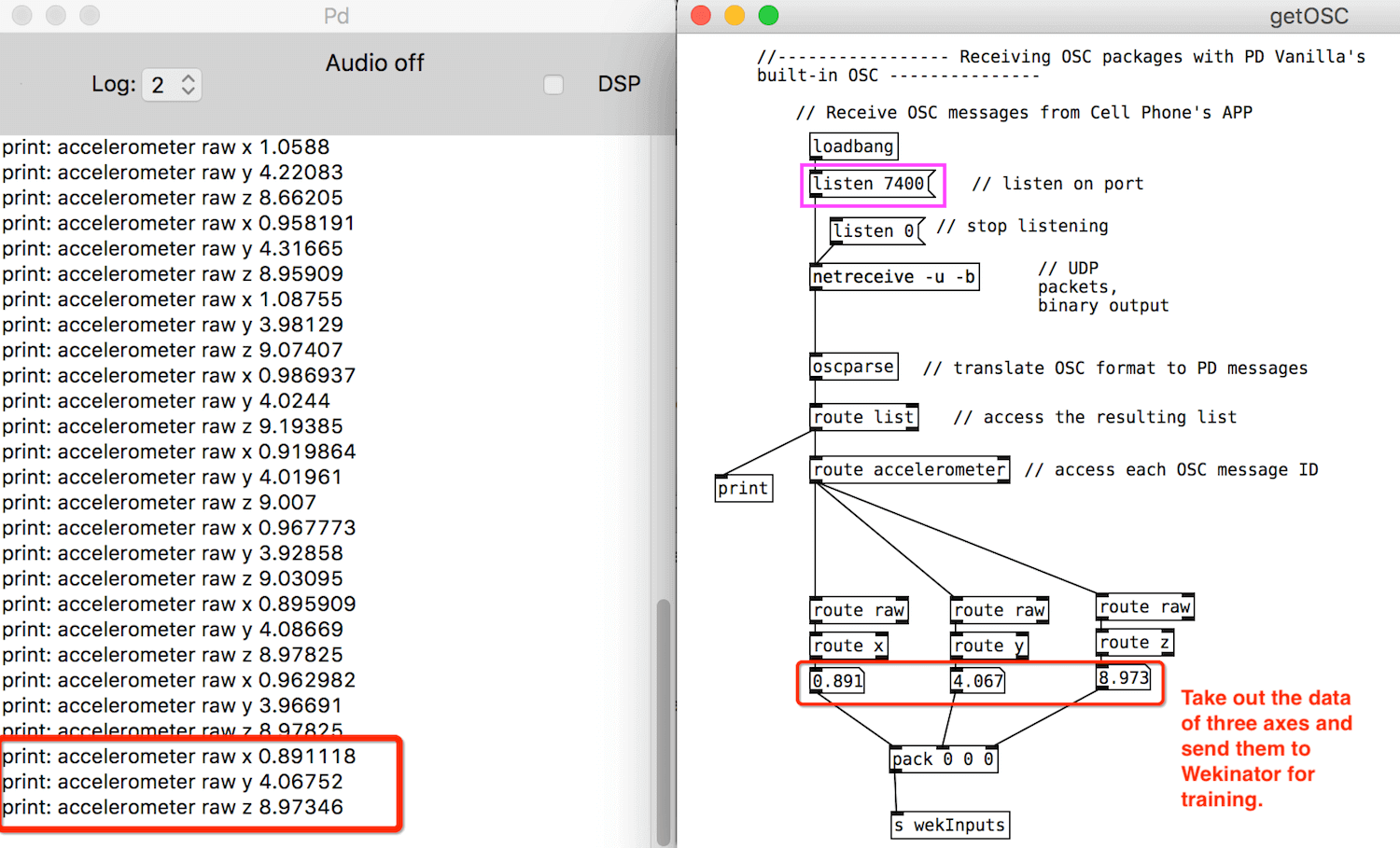

- The OSCHook app sends the axis data of the smartphone’s accelerometer in OSC format to getOSC.pd

- getOSC.pd unpacks the axis data and sends only the numeric values to sendOSCToWek.pd

- sendOSCToWek.pd then forwards the plain numeric data received from getOSC.pd to Wekinator, using the same port (6448) that Wekinator listens on

- Wekinator outputs its classification result as a class (1 to 5) to _main.pd, which runs on the Bela board installed in the instrumentbot

- The robot acts on commands from _main.pd, which are determined by the class value received from Wekinator:

  - The instrumentbot stays still but starts looking around while the phone lies flat

  - The robot moves forward and stops looking around while the phone is upside down

  - The robot moves backward while the phone stands vertically against a table

  - The robot turns right while the phone is turned to the right

  - The robot turns left while the phone is turned to the left
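The last step of the list, turning a Wekinator class into a behaviour, can be sketched in Python as a simple lookup. The class numbering below is an assumption that follows the order of the list, since the text does not say which number belongs to which orientation. The eyeball state for the backward and turning cases is also assumed, and the fallback of stopping on an unknown class is a design choice of this sketch.

```python
# Assumed numbering: classes 1 to 5 in the order the behaviours are listed.
# Each entry is (motor command, whether the eyeball looks around).
CLASS_TO_BEHAVIOUR = {
    1: ("stop", True),       # phone lying flat: stay still, look around
    2: ("forward", False),   # phone upside down
    3: ("backward", False),  # phone standing vertically (eyeball state assumed)
    4: ("right", False),     # phone turned right (eyeball state assumed)
    5: ("left", False),      # phone turned left (eyeball state assumed)
}


def behaviour_for(class_value):
    """Map a class value (possibly received as a float) to a behaviour.

    Unknown classes fall back to stopping without eye movement.
    """
    return CLASS_TO_BEHAVIOUR.get(int(round(class_value)), ("stop", False))
```

Rounding before the lookup is deliberate: values carried in OSC messages are often floats, so a class may arrive as `2.0` rather than `2`.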

Bela Board Pin Connection (Bela Cape Diagram)

Implementation: Pd OSC Communication and Machine Learning Toolkit (Wekinator)

Step 0. Connecting Bela to WiFi

After plugging the USB WiFi dongle into the Bela board, edit Bela’s network interface configuration by following the commands in the tutorial linked above.

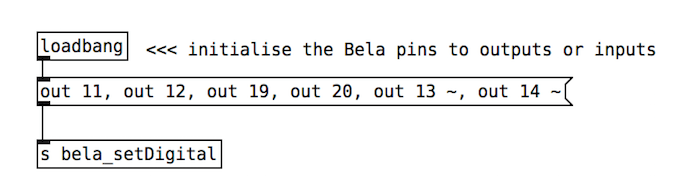

Step 1. Initialize Bela pin mode.

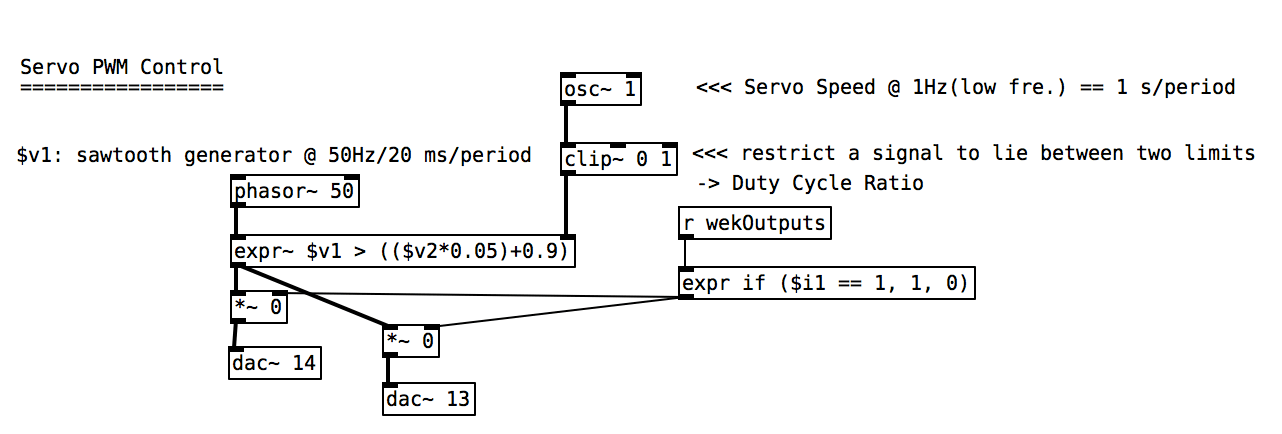

Step 2. Generate PWM and output it to control the angle of the two servos.

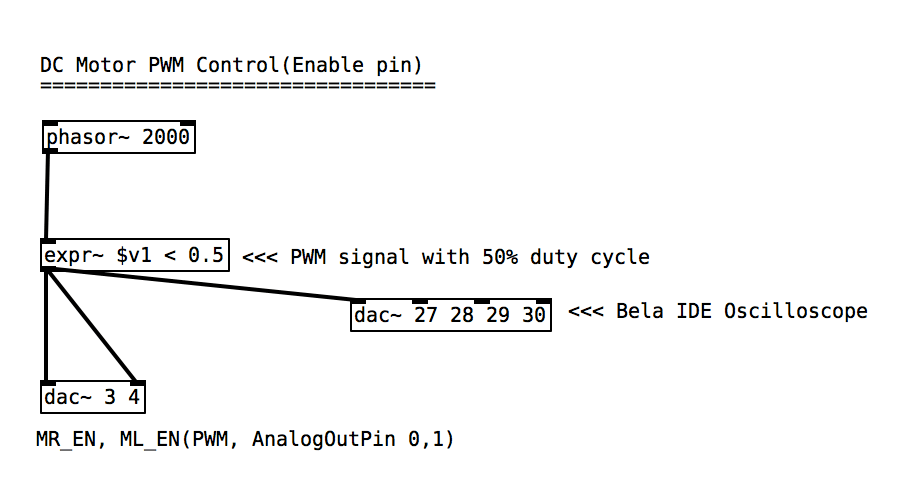
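As a rough guide to the numbers involved in Step 2, the following Python sketch converts a servo angle into a pulse width and duty cycle. It assumes the nominal hobby-servo timing of a 20 ms period with pulses between 1 ms and 2 ms. Individual SG90 servos often need a somewhat wider range, so the limits are parameters rather than fixed values.

```python
def servo_pulse_ms(angle_deg, min_ms=1.0, max_ms=2.0, max_angle=180.0):
    """Map an angle to a pulse width in milliseconds (nominal timing assumed).

    Angles outside [0, max_angle] are clamped to protect the servo.
    """
    angle = max(0.0, min(max_angle, angle_deg))
    return min_ms + (max_ms - min_ms) * angle / max_angle


def servo_duty_cycle(angle_deg, period_ms=20.0, **pulse_limits):
    """Return the fraction of each PWM period that the signal stays high."""
    return servo_pulse_ms(angle_deg, **pulse_limits) / period_ms
```

Under these assumptions the centre position (90 degrees) corresponds to a 1.5 ms pulse, or a duty cycle of 7.5%.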

Step 3. Generate PWM and output it to the L293D’s ENABLE pins to control the speed of the DC motors.
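One way to picture the PWM signal in Step 3 is as a repeating block of high and low samples whose average equals the duty cycle. The Python sketch below builds one such period. It does not reproduce the actual Pd patch, and the function name and parameters are hypothetical.

```python
def pwm_block(duty_cycle, period_samples):
    """Build one PWM period as a list of 1s (high) followed by 0s (low)."""
    duty = max(0.0, min(1.0, duty_cycle))  # clamp to the valid range
    high = round(duty * period_samples)
    return [1] * high + [0] * (period_samples - high)
```

A higher duty cycle keeps the ENABLE pin high for a larger share of each period, which delivers more average power to the motor and so increases its speed.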

Step 4. Send accelerometer data in OSC format from the smartphone’s OSC app to the PC running the getOSC Pd patch.

Step 5. The PC receives the axis data from the smartphone and reduces it to plain floating-point values.

Step 6. Wekinator receives the plain axis data from the sendOSCToWek Pd patch, which sends to the port Wekinator listens on.
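Steps 4 to 6 all pass OSC messages around, so it may help to see what such a message looks like on the wire. The Python sketch below encodes and decodes a float-only OSC message following the OSC 1.0 layout (null-terminated strings padded to four bytes, then big-endian 32-bit floats). The address `/wek/inputs` is Wekinator's default input message name. The assumption that this project keeps that default, and the helper names themselves, are not taken from the original patches.

```python
import struct


def _osc_string(text):
    """Encode a string OSC-style: null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address, values):
    """Build an OSC message that carries only 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(">%df" % len(values), *values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def decode_osc_floats(packet):
    """Parse a float-only OSC message into (address, list of floats)."""
    def read_string(offset):
        end = packet.index(b"\x00", offset)
        text = packet[offset:end].decode("ascii")
        return text, (end + 4) & ~3  # skip terminator and padding

    address, offset = read_string(0)
    type_tags, offset = read_string(offset)
    count = type_tags.count("f")
    values = struct.unpack_from(">%df" % count, packet, offset)
    return address, list(values)
```

A packet built with `encode_osc_floats("/wek/inputs", [x, y, z])` could then be sent over UDP to port 6448 on the machine running Wekinator, for instance with a `socket.SOCK_DGRAM` socket.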

Step 7. The four INPUT pins of the L293D are set high or low according to the output class produced by the trained Wekinator model. The same class also controls the frequency played by the speaker.
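The sound half of Step 7 can be sketched as a class-to-frequency table feeding a sine generator. The frequencies below are hypothetical placeholders, because the actual values used in the patch are not given, and the 44.1 kHz sample rate is assumed. The sketch is in Python rather than Pure Data so that it can be read and run without the board.

```python
import math

# Hypothetical frequencies in Hz; the values used in the patch are not given.
CLASS_TO_FREQ_HZ = {1: 220.0, 2: 330.0, 3: 440.0, 4: 550.0, 5: 660.0}


def sine_block(freq_hz, n_samples, sample_rate=44100, phase=0.0):
    """Generate n_samples of a sine wave and return (samples, next_phase).

    Passing next_phase into the following call keeps the wave continuous
    across blocks, even when the frequency changes with the class.
    """
    step = 2 * math.pi * freq_hz / sample_rate
    samples = [math.sin(phase + i * step) for i in range(n_samples)]
    next_phase = (phase + n_samples * step) % (2 * math.pi)
    return samples, next_phase
```

Carrying the phase from one block to the next avoids audible clicks when the detected class, and therefore the frequency, changes between blocks.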